Statistics Formula Sheet

Author:

Elizabeth McDonald

Last Updated:

5 лет назад

License:

Creative Commons CC BY 4.0

Аннотация:

Formula sheet for a statistics course

\begin

Discover why over 25 million people worldwide trust Overleaf with their work.

\begin

Discover why over 25 million people worldwide trust Overleaf with their work.

\documentclass{article}

\usepackage[landscape]{geometry}

\usepackage{url}

\usepackage{multicol}

\usepackage{amsmath}

\usepackage{esint}

\usepackage{amsfonts}

\usepackage{tikz}

\usetikzlibrary{decorations.pathmorphing}

\usepackage{amsmath,amssymb}

\usepackage{colortbl}

\usepackage{xcolor}

\usepackage{mathtools}

\usepackage{amsmath,amssymb}

\usepackage{enumitem}

\makeatletter

\newcommand*\bigcdot{\mathpalette\bigcdot@{.5}}

\newcommand*\bigcdot@[2]{\mathbin{\vcenter{\hbox{\scalebox{#2}{$\m@th#1\bullet$}}}}}

\makeatother

\title{STAT 251 Formula Sheet}

\usepackage[brazilian]{babel}

\usepackage[utf8]{inputenc}

\advance\topmargin-.8in

\advance\textheight3in

\advance\textwidth3in

\advance\oddsidemargin-1.45in

\advance\evensidemargin-1.45in

\parindent0pt

\parskip2pt

\newcommand{\hr}{\centerline{\rule{3.5in}{1pt}}}

%\colorbox[HTML]{e4e4e4}{\makebox[\textwidth-2\fboxsep][l]{texto}

\begin{document}

\begin{center}{\huge{\textbf{STAT 251 Formula Sheet}}}\\

\end{center}

\begin{multicols*}{2}

\tikzstyle{mybox} = [draw=black, fill=white, very thick,

rectangle, rounded corners, inner sep=10pt, inner ysep=10pt]

\tikzstyle{fancytitle} =[fill=black, text=white, font=\bfseries]

%------------ Measures of Center ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\begin{tabular}{lp{8cm} l}

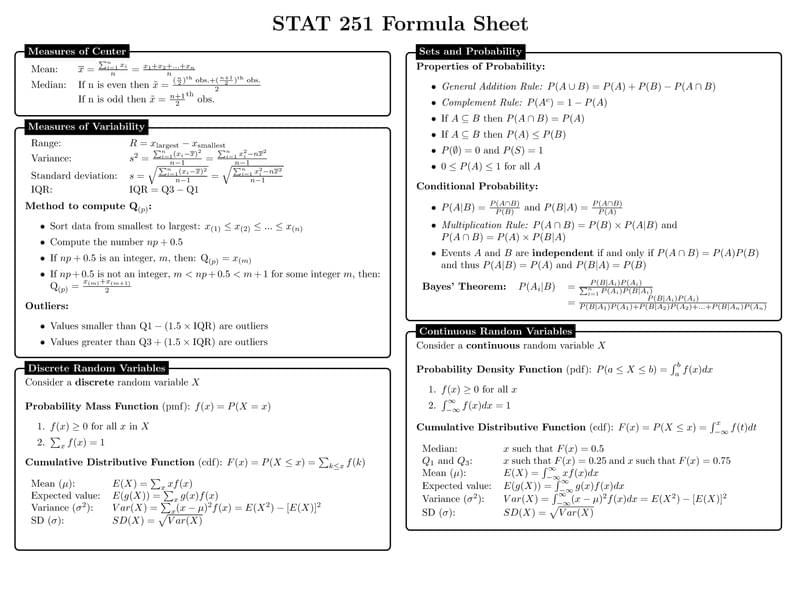

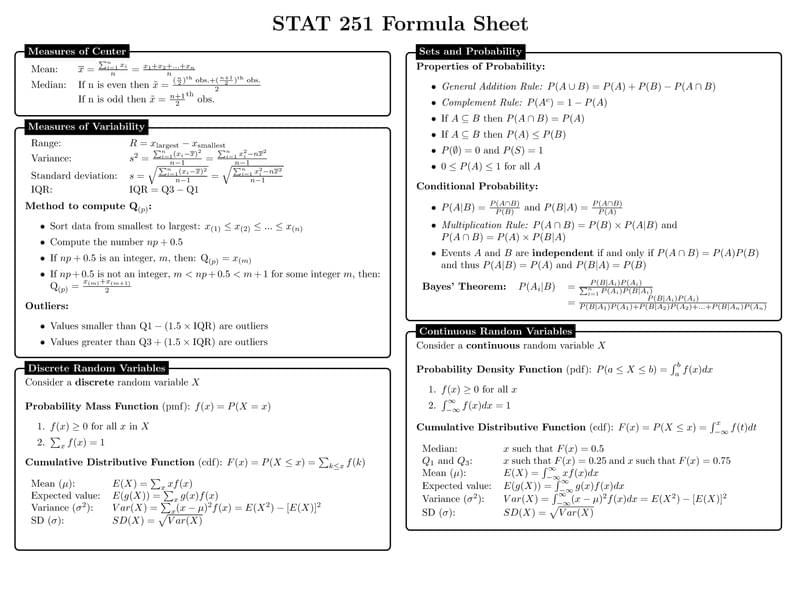

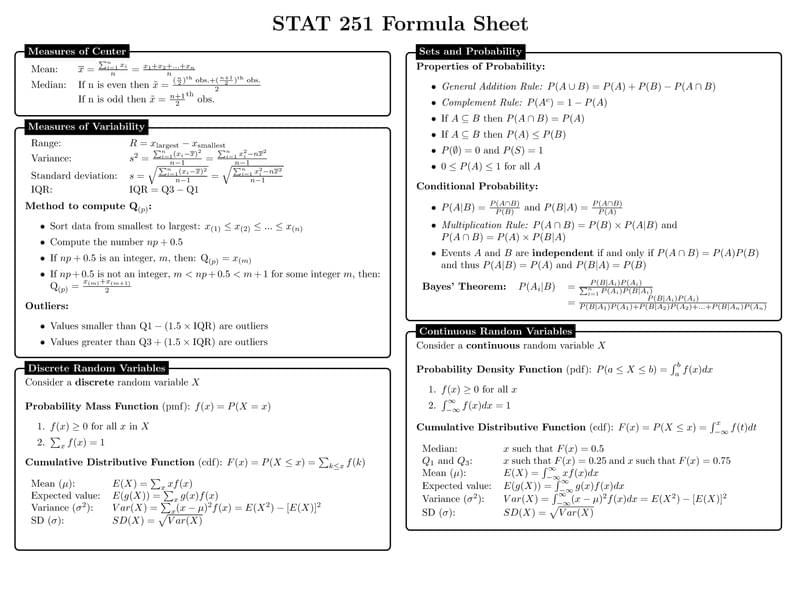

Mean:

& $\overline{x} = \frac{\sum_{i=1}^{n}x_i}{n} = \frac{x_1 + x_2 + ... + x_n}{n} $ \\

Median: & If n is even then $ \tilde{x} = \frac{(\frac{n}{2})^\text{th} \text{ obs.} + (\frac{n+1}{2})^\text{th} \text{ obs.}}{2} $ \\

& If n is odd then $ \tilde{x} = \frac{n+1}{2}^\text{th}\text{ obs.}$ \\

\end{tabular}

\end{minipage}

};

%------------ Measures of Center Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Measures of Center};

\end{tikzpicture}

%------------ Measures of Variability ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\begin{tabular}{lp{8cm} l}

Range: & $R = x_\text{largest} - x_\text{smallest}$\\

Variance: & $s^2 = \frac{\sum_{i=1}^{n}(x_i - \overline{x})^2}{n-1} = \frac{\sum_{i=1}^{n}x_i^2 - n\overline{x}^2}{n-1}$\\

Standard deviation: & $s = \sqrt{\frac{\sum_{i=1}^{n}(x_i - \overline{x})^2}{n-1}} = \sqrt{\frac{\sum_{i=1}^{n}x_i^2 - n\overline{x}^2}{n-1}}$\\

IQR: & $\text{IQR} = \text{Q3} - \text{Q1}$

\end{tabular}\\

\textbf{Method to compute $\text{Q}_{(p)}$:}

\begin{itemize}

\setlength\itemsep{0em}

\item Sort data from smallest to largest: $x_{(1)} \leq x_{(2)} \leq ... \leq x_{(n)}$

\item Compute the number $np + 0.5$

\item If $np + 0.5$ is an integer, $m$, then: $\text{Q}_{(p)} = x_{(m)}$

\item If $np + 0.5$ is not an integer, $m < np + 0.5 < m+1$ for some integer $m$, then:\\ $\text{Q}_{(p)} = \frac{x_{(m)}+x_{(m+1)}}{2}$

\end{itemize}

\textbf{Outliers:}

\begin{itemize}

\setlength\itemsep{0em}

\item Values smaller than $\text{Q1} - (1.5 \times \text{IQR})$ are outliers

\item Values greater than $\text{Q3} + (1.5 \times \text{IQR})$ are outliers

\end{itemize}

\end{minipage}

};

%------------ Measures of Variability Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Measures of Variability};

\end{tikzpicture}

%------------ Discrete Random Variable and Distributions ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Consider a \textbf{discrete} random variable $X$ \\

\textbf{Probability Mass Function} (pmf): $f(x) = P(X = x)$

\begin{enumerate}

\setlength\itemsep{0em}

\item $f(x) \geq 0$ for all $x$ in $X$

\item $\sum_x f(x) = 1$

\end{enumerate}

\textbf{Cumulative Distributive Function} (cdf): $F(x) = P(X \leq x) = \sum_{k \leq x}f(k)$ \\

\begin{tabular}{lp{8cm} l}

Mean ($\mu$): & $E(X) = \sum_{x}xf(x)$ \\

Expected value: & $E(g(X)) = \sum_{x}g(x)f(x)$ \\

Variance ($\sigma^2$): & $Var(X) = \sum_x (x-\mu)^2f(x) = E(X^2)-[E(X)]^2$\\

SD ($\sigma$): & $SD(X) = \sqrt{Var(X)}$

\end{tabular} \\

\\

\end{minipage}

};

%------------ Discrete Random Variable and Distributions Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Discrete Random Variables};

\end{tikzpicture}

%------------ Sets and Probability ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Properties of Probability:}

\begin{itemize}

\setlength\itemsep{0em}

\item \textit{General Addition Rule:} $P(A \cup B) = P(A) + P(B) - P(A \cap B)$

\item \textit{Complement Rule:} $P(A^c) = 1 - P(A)$

\item If $A \subseteq B$ then $P(A \cap B) = P(A)$

\item If $A \subseteq B$ then $P(A) \leq P(B)$

\item $P(\emptyset) = 0$ and $P(S) = 1$

\item $0 \leq P(A) \leq 1$ for all $A$

\end{itemize}

\textbf{Conditional Probability:}

\begin{itemize}

\setlength\itemsep{0em}

\item $P(A|B) = \frac{P(A \cap B)}{P(B)}$ and $P(B|A) = \frac{P(A \cap B)}{P(A)}$

\item \textit{Multiplication Rule:} $P(A \cap B) = P(B) \times P(A|B)$ and \\ $P(A \cap B) = P(A) \times P(B|A)$

\item Events $A$ and $B$ are \textbf{independent} if and only if $P(A \cap B) = P(A)P(B)$ \\ and thus $P(A|B) = P(A)$ and $P(B|A) = P(B)$

\end{itemize}

\begin{tabular}{l l l}

\textbf{Bayes' Theorem:}

& $P(A_i|B)$ &$= \frac{P(B|A_i)P(A_i)}{\sum_{i=1}^{n}P(A_i)P(B|A_i)}$\\

&& $= \frac{P(B|A_i)P(A_i)}{P(B|A_1)P(A_1) + P(B|A_2)P(A_2)+ . . . + P(B|A_n)P(A_n)}$\\

\end{tabular}

\end{minipage}

};

%------------ Sets and Probability Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Sets and Probability};

\end{tikzpicture}

%------------ Continuous Random Variable and Distributions ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Consider a \textbf{continuous} random variable $X$ \\

\textbf{Probability Density Function} (pdf): $P(a \leq X \leq b) = \int_{a}^{b} f(x) dx$

\begin{enumerate}

\setlength\itemsep{0em}

\item $f(x) \geq 0$ for all $x$

\item $\int^{\infty}_{-\infty} f(x) dx = 1$

\end{enumerate}

\textbf{Cumulative Distributive Function} (cdf): $F(x) = P(X \leq x) = \int_{-\infty}^{x} f(t) dt$ \\

\begin{tabular}{lp{8cm} l}

Median: & $x$ such that $F(x) = 0.5$ \\

$Q_1$ and $Q_3$: & $x$ such that $F(x) = 0.25$ and $x$ such that $F(x) = 0.75$\\

Mean ($\mu$): & $E(X) = \int^{\infty}_{-\infty} xf(x) dx$ \\

Expected value: & $E(g(X)) = \int^{\infty}_{-\infty} g(x)f(x) dx$ \\

Variance ($\sigma^2$): & $Var(X) = \int^{\infty}_{-\infty} (x-\mu)^2f(x) dx = E(X^2)-[E(X)]^2$\\

SD ($\sigma$): & $SD(X) = \sqrt{Var(X)}$

\end{tabular} \\

\end{minipage}

};

%------------ Continuous Random Variable and Distributions Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Continuous Random Variables};

\end{tikzpicture}

%------------ Summarizing Main Features of f(x) ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Consider two random variables $X, Y$\\

\textbf{Properties of Probability:}

\begin{itemize}

\setlength\itemsep{0em}

\item $E(aX+b) = aE(X)+b$, for $a, b \in \mathbb{R}$

\item $E(X+Y)=E(X)+E(Y)$, for all pairs of $X$ and $Y$

\item $E(XY) = E(X)E(Y)$, for independent $X$ and $Y$

\item $Var(aX+b) = a^2Var(X)$, for $a, b \in \mathbb{R}$

\item $Var(X+Y) = Var(X) + Var(Y)$\\

$Var(X-Y) = Var(X) + Var(Y)$, for independent $X$ and $Y$

\end{itemize}

\textbf{Covariance:}

\begin{itemize}

\setlength\itemsep{0em}

\item $\text{Cov}(X,Y) = E{[X-E(X)][Y-E(Y)]} = E(XY)-E(X)E(Y)$\\

If $X$ and $Y$ are independent, $Cov(X,Y) = 0$

\item $Var(X+Y) = Var(X)+Var(Y)+2Cov(X,Y)$

\item $Var(aX+bY+c) = a^2Var(X)+b^2Var(Y)+2abCov(X,Y)$

\end{itemize}

\end{minipage}

};

%------------ Summarizing Main Features of f(x) ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Summarizing Main Features of $f(x)$};

\end{tikzpicture}

%------------ Sum and Average of Independent Random Variables ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Sum of Independent Random Variables:}\\

$Y = a_1X_1+a_2X_2+...+a_nX_n$, for $a_1, a_2,..., a_n \in \mathbb{R}$

\begin{itemize}

\setlength\itemsep{0em}

\item $E(Y) = a_1E(X_1)+a_2E(X_2)+...+a_nE(X_n)$

\item $Var(Y) = a_1^2Var(X_1) + a_2^2Var(X_2) + ... + a_n^2Var(X_n)$

\end{itemize}

If $n$ random variables $X_i$ have common mean $\mu$ and common variance $\sigma^2$ then,

\begin{itemize}

\setlength\itemsep{0em}

\item $E(Y) = (a_1 + a_2 + ... + a_n)\mu$

\item $Var(Y) = (a_1^2 + a_2^2 + ... + a_n^2)\sigma^2$

\end{itemize}

\textbf{Average of Independent Random Variables:}\\

$X_1,X_2,...,X_n$ are $n$ independent random variables

\begin{itemize}

\setlength\itemsep{0em}

\item $\overline{X} = \frac{X_1+X_2+...+X_n}{n}$

\item $E[\overline{X}] = \frac{1}{n}[E(X_1)+E(X_2)+...+E(X_n)]$

\item $Var[\overline{X}] = \frac{1}{n^2}[Var(X_1)+Var(X_2)+...+Var(X_n)]$

\end{itemize}

If $n$ random variables $X_i$ have common mean $\mu$ and common variance $\sigma^2$ then,

\begin{itemize}

\setlength\itemsep{0em}

\item $E[\overline{X}] = \mu$

\item $Var[\overline{X}] = \frac{\sigma^2}{n}$

\end{itemize}

\end{minipage}

};

%------------ Sum and Average of Independent Random Variables ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Sum and Average of Independent Random Variables};

\end{tikzpicture}

%------------ Maximum and Minimum of Independent Variables ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Given $n$ independent random variables $X_1, X_2,...,X_n$.\\

For each $X_i$, cdf $F_X(x)$ and pdf is $f_X(x)$.\\

\textbf{Maximum of Independent Random Variables:}\\

Consider $V=max\{X_1, X_2, ... , X_n\}$\\

\underline{cdf of V}\\

\begin{tabular}{lp{12cm} l}

$F_V(v)$& $= P(V \leq v) = P(X_1 \leq v, X_2 \leq v,..., X_n \leq v)$\\

& $= P(X_1 \leq v)P(X_2 \leq v)...P(X_n \leq v) = F_{X_1}(v)F_{X_2}(v)...F_{X_n}(v)$\\

& $= [F_X(v)]^n$ ; if $X_i$'s are all identically distributed

\end{tabular}\\

\underline{pdf of V}\\

\begin{tabular}{lp{12cm} l}

$f_V(v)$& $= F_V'(v) = \frac{d}{dv}F_V(v) = \frac{d}{dv}[F_X(v)]^n = n[F_X(v)]^{n-1}\frac{d}{dv}F_X(v)$\\

& $= n[F_X(v)]^{n-1}f_X(v)$

\end{tabular}\\\\

\textbf{Minimum of Independent Random Variables:}\\

Consider $U=min\{X_1, X_2, ... , X_n\}$\\

\underline{cdf of U}\\

\begin{tabular}{lp{12cm} l}

$F_U(u)$& $= P(U \leq u) = 1-P(U>u) = 1-P(X_1>u, X_2>u,...,X_n>u)$\\

& $= 1-P(X_1>u)P(X_2>u)...P(X_n>u)$\\

& $= 1-[1-F_{X_1}(u)][1-F_{X_2}(u)]...[1-F_{X_n}(u)]$\\

& $= 1-[1-F_X(u)]^n$ ; if $X_i$'s are all identically distributed

\end{tabular}\\

\underline{pdf of U}\\

\begin{tabular}{lp{12cm} l}

$f_U(u)$& $= F_U'(u) = \frac{d}{du}\{1-[1-F_X(u)]^2\} = 0 - n[1-F_X(u)]^{n-1}\frac{d}{du}(-F_X(u))$\\

& $= n[1-F_X(u)]^{n-1}f_X(u)$

\end{tabular}

\end{minipage}

};

%------------ Maximum and Minimum of Independent Variables ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Maximum and Minimum of Independent Variables};

\end{tikzpicture}

%------------ Some Continuous Distributions ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Uniform Distribution:} $X \sim U(a, b)$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = \frac{a+b}{2}$ \\

Variance: &$\sigma^2$ = $Var(X) = \frac{(b-a)^2}{12}$\\

\end{tabular}

\begin{multicols*}{2}

\underline{pdf of X}\\

$f(x)=$

$\begin{cases}

\frac{1}{b-a} & a \leq x \leq b\\

0 & \textrm{otherwise} \\

\end{cases}$\\

\columnbreak

\underline{cdf of X}\\

$F(x)=$

$\begin{cases}

0 & x < a\\

\frac{x-a}{b-a} & a \leq x \leq b\\

1 & x > b

\end{cases}$

\end{multicols*}

\textbf{Exponential Distribution:} $X \sim Exp(\lambda)$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = \frac{1}{\lambda}$ \\

Variance: &$\sigma^2$ = $Var(X) = \frac{1}{\lambda^2}$\\

\end{tabular}

\begin{multicols*}{2}

\underline{pdf of X}\\

$f(x)=$

$\begin{cases}

\lambda e^{-\lambda x} & x \geq 0\\

0 & x < 0\\

\end{cases}$\\

\columnbreak

\underline{cdf of X}\\

$F(x)=$

$\begin{cases}

1 - e^{-\lambda x} & x \geq 0\\

0 & x < 0

\end{cases}$

\end{multicols*}

\end{minipage}

};

%------------ Some Continuous Distributions Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Some Continuous Distributions};

\end{tikzpicture}

%------------ Normal Distribution ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Normal Distribution:} $X \sim N(\mu, \sigma^2)$\\

Standardized Normal: $Z \sim N(0, 1)$ where $Z = \frac{X-\mu}{\sigma}$\\

\underline{68-95-99.7 Rule:}

\begin{itemize}

\setlength\itemsep{0em}

\item approximately 68\% of observations fall within $\sigma$ of $\mu$

\item approximately 95\% of observations fall within $2\sigma$ of $\mu$

\item approximately 99.7\% of observations fall within $3\sigma$ of $\mu$

\end{itemize}

\end{minipage}

};

%------------ Normal Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Normal Distribution};

\end{tikzpicture}

%------------ Bernoulli and Binomial Random Variables ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Bernoulli Random Variable:}\\

Bernoulli random variable $X$ has only two outcomes, success and failure.\\

$P(Success) = p$ and $P(Failure) = 1 - p$\\

\textbf{Bernoulli Distribution:}\\

$X \sim Bernoulli(p)$\\

\underline{pmf}: $P(X=x) = p^x(1-p)^{1-x}$ for $x=0,1$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = p$ \\

Variance: &$\sigma^2 = Var(X) = p(1-p)$\\

\end{tabular}\\

\textbf{Binomial Random Variable:}\\

Binomial random variable $X$ is the number of successes for $n$ independent trials and each trial has the same probability of success $p$.\\

\textbf{Binomial Distribution:}\\

$X \sim Bin(n, p)$\\

\underline{pmf}: $P(X=x) = {n \choose x} p^x(1-p)^{n-x}$ for $x=0,1,2,...,n$\\

\underline{cdf}: $P(X\leq x) = \sum_{i=0}^{x} {n \choose i} p^i(1-p)^{n-i}$ for $x=0,1,2,...,n$\\

\textit{Note:} ${n \choose x} = \frac{n!}{x!(n-x)!}$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = np$ \\

Variance: &$\sigma^2 = Var(X) = np(1-p)$\\

\end{tabular}

\end{minipage}

};

%------------ Bernoulli and Binomial Random Variables Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Bernoulli and Binomial Random Variables};

\end{tikzpicture}

%------------ Geometric Distribution and Return Period ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Geometric Random Variable:}\\

Geometric random variable X is the number of independent trials needed until the first success occurs.\\

\textbf{Geometric Distribution:}\\

$X \sim Geo(p)$ where $p$ is the probability of success\\

\underline{pmf}: $P(X=x)=p(1-p)^{x-1}$ for $x=1,2,3,...$\\

\underline{cdf}: $P(X \leq x)=1-(1-p)^x$ for $x=1,2,3,...$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = \frac{1}{p}$ \\

Variance: &$\sigma^2 = Var(X) = \frac{1-p}{p^2}$\\

\end{tabular}\\

\end{minipage}

};

%------------ Geometric Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Geometric Distribution};

\end{tikzpicture}

%------------ Poisson Distribution ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Poisson Process:}\\

Random variable X is the number of occurrences in a given interval.\\

\textbf{Poisson Distribution:}\\

$X \sim Poisson(\lambda)$ where $\lambda$ is the rate of occurrences\\

\underline{pmf}: $P(X=x)=\frac{\lambda^x e^{-\lambda}}{x!}$ for $x=0,1,2,3,...$\\

\underline{cdf}: $P(X\leq x)=\sum_{i=0}^{x}\frac{\lambda^i e^{-\lambda}}{i!}$ for $x=0,1,2,3,...$\\

\begin{tabular}{lp{8cm} l}

Mean: &$\mu = E(X) = \lambda$ \\

Variance: &$\sigma^2 = Var(X) = \lambda$\\

\end{tabular}

\begin{itemize}

\setlength\itemsep{0em}

\item Let $T \sim Exp(\lambda)$ be the time between two consecutive occurrences of events. (Can also be the waiting time for first event.)

\end{itemize}

\end{minipage}

};

%------------ Poisson Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Poisson Distribution};

\end{tikzpicture}

%------------ Poisson Approximation to the Binomial Distribution ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Let $X \sim Bin(n, p)$ be a binomial random variable. If $n$ is large $(n \geq 20)$ and $p$ or $1-p$ is small ($np<5$ or $n(1-p)<5$), then we can use a Poisson random variable with rate $\lambda = np$ to approximate the probabilistic behaviour of $X$.\\\\

$X \sim Poisson(np)$, approx. for $x=0,1,2,...n$

\end{minipage}

};

%------------ Poisson Approximation to the Binomial Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Poisson Approximation to the Binomial Distribution};

\end{tikzpicture}

%------------ Central Limit Theorem ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Let $X_1$, $X_2$,..., $X_n$ be a random sample from an arbitrary population/distribution with mean $\mu$ and variance $\sigma^2$. When $n$ is large ($n \geq 20$) then\\\\

$\overline{X} = \frac{X_1 + X_2 + ... + X_n}{n} \sim N(\mu, \frac{\sigma^2}{n})$, approx.\\\\

When dealing with sum, the CLT can still be used. Then\\\\

$T = X_1 + X_2 + ... + X_n = n\overline{X}$ \\

$T \sim N(n\mu, n\sigma^2)$, approx.

\end{minipage}

};

%------------ Central Limit Theorem Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Central Limit Theorem};

\end{tikzpicture}

%------------ Normal Approximation to the Binomial Distribution ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Let $X \sim Bin(n,p)$. When $n$ is large so that both $np \geq 5$ and $n(1-p) \geq 5$. We can use the normal distribution to get an approximate answer. Remember to use \textbf{continuity correction}.\\\\

$X \sim N(np, np(1-p))$, approx.

\end{minipage}

};

%------------ Normal Approximation to the Binomial Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Normal Approximation to the Binomial Distribution};

\end{tikzpicture}

%------------ Normal Approximation to the Poisson Distribution ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Let $X \sim Poisson(\lambda)$. When $\lambda$ is large ($\lambda \geq 20$) then the Normal distribution can be used to approximate the Poisson distribution. Remember to use \textbf{continuity correction}.\\\\

$X \sim N(\lambda, \lambda)$, approx.

\end{minipage}

};

%------------ Normal Approximation to the Poisson Distribution Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Normal Approximation to the Poisson Distribution};

\end{tikzpicture}

%------------ Continuity Correction ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Consider continuous random variable $Y$ and discrete random variable $X$.

\begin{itemize}

\setlength\itemsep{0em}

\item $P(X > 4) = P(X \geq 5) = P(Y \geq 4.5)$

\item $P(X \geq 4) = P(Y \geq 3.5)$

\item $P(X < 4) = P(X \leq 3) = P(Y \leq 3.5)$

\item $P(X \leq 4) = P(Y \leq 4.5)$

\item $P(X = 4) = P(3.5 \leq Y \leq 4.5)$

\end{itemize}

\end{minipage}

};

%------------ Continuity Correction Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Continuity Correction};

\end{tikzpicture}

%------------ Point Estimators ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Suppose that $X_1$, $X_2$,..., $X_n$ are random samples from a population with mean $\mu$ and variance $\sigma^2$.

\begin{itemize}

\setlength\itemsep{0em}

\item $\overline{x}$ is an unbiased estimator of $\mu$\\

$\overline{x} = \frac{\sum_{i=1}^{n}x_i}{n}$

\item $s^2$ is an unbiased estimator of $\sigma^2$\\

$s^2 = \frac{\sum_{i=1}^{n}(x_i-\overline{x})^2}{n-1} = \frac{\sum_{i=1}^{n}x_i^2-n\overline{x}^2}{n-1}$

\item $\theta$ is the parameter, $\hat{\theta}$ is the point estimator. When $E(\hat{\theta}) = \theta$, $\hat{\theta}$ is an unbiased estimator. The bias of an estimator is $bias(\theta) = E(\hat{\theta}) - \theta$.

\end{itemize}

\end{minipage}

};

%------------ Point Estimators Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Point Estimators};

\end{tikzpicture}

%------------ Confidence Interval ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{$(1-\alpha)100\%$ Confidence Interval for population mean $\mu$:}\\ (point estimator of $\mu$ is $\overline{x}$)\\

\textit{General Form:} point estimate $\pm$ margin of error\\

When $\sigma^2$ is \textbf{known}: $\overline{x} \pm z_{\frac{\alpha}{2}}\frac{\sigma}{\sqrt{n}}$\\

When $\sigma^2$ is \textbf{unknown}:

$\overline{x} \pm t_{\frac{\alpha}{2}, n-1}\frac{s}{\sqrt{n}}$\\

Typical $z$ values of $\alpha$:\\

\begin{tabular}{lp{1cm} ll}

$\alpha = 0.1$ & $90\%$ & $z_{\frac{\alpha}{2}} = z_{0.05} = 1.645$\\

$\alpha = 0.05$ & $95\%$ & $z_{\frac{\alpha}{2}} = z_{0.025} = 1.96$\\

$\alpha = 0.01$ & $99\%$ & $z_{\frac{\alpha}{2}} = z_{0.005} = 2.575$\\

\end{tabular}\\\\

\textbf{$(1-\alpha)100\%$ Confidence Interval for $\mu_1 - \mu_2$:}\\

$(\overline{x}_1 - \overline{x}_2) \pm t_{\frac{\alpha}{2}, n_1+n_2-2}s_p\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}$

\end{minipage}

};

%------------ Confidence Interval Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Confidence Interval};

\end{tikzpicture}

%------------ Pooled Standard Deviation ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

Requires assumptions that population variances are equal: $\sigma_1^2 = \sigma_2^2 = \sigma^2$\\

The pooled standard deviation $s_p$ estimates the common standard deviation $\sigma$.\\

$s_p = \sqrt{\frac{(n_1-1)s_1^2+(n_2-1)s_2^2}{n_1+n_2-2}}$

\end{minipage}

};

%------------ Pooled Standard Deviation Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Pooled Standard Deviation};

\end{tikzpicture}

%------------ Testing of Hypotheses about mu ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

$H_o$: Null hypothesis is a tentative assumption about a population parameter.\\

$H_a$: Alternative hypothesis is what the test is attempting to establish.

\begin{itemize}

\setlength\itemsep{0em}

\item $H_o: \mu \geq \mu_o$ vs $H_a: \mu < \mu_o$ (one-tail test, lower-tail)

\item $H_o: \mu \leq \mu_o$ vs $H_a: \mu > \mu_o$ (one-tail test, upper-tail)

\item $H_o: \mu = \mu_o$ vs $H_a: \mu \neq \mu_o$ (two-tail test)

\end{itemize}

\textbf{Test Statistic:}\\

Case 1: $\sigma^2$ is \underline{known}\\

$z=\frac{\overline{x}-\mu_o}{\frac{\sigma}{\sqrt{n}}} \sim N(0,1)$\\

Case 2: $\sigma^2$ is \underline{unknown}\\

$t=\frac{\overline{x}-\mu_o}{\frac{s}{\sqrt{n}}} \sim t_{n-1}$\\

\textbf{Type I and Type II errors:}\\

\begin{tabular}{lp{8cm} l}

Type I error: & rejecting $H_o$ when $H_o$ is true\\

Type II error: & not rejecting $H_o$ with $H_o$ is false\\

\end{tabular}\\

\begin{tabular}{lp{8cm} l}

$P(\textrm{Type I error}) = \alpha$\\

$P(\textrm{Type II error}) = \beta$\\

\end{tabular}\\\\

\textbf{Power} is the probability of rejecting $H_o$, when $H_o$ is false.\\

$\textrm{Power} = 1-\beta$\\

\textbf{Comparison of two means:}\\

Two independent populations with means $\mu_1$ and $\mu_2$.\\

Assume random samples, normal distributions, and equal variances ($\sigma_1^2 = \sigma_2^2$).

\begin{itemize}

\setlength\itemsep{0em}

\item $H_o: \mu_1 - \mu_2 \geq \Delta_o$ vs $H_a: \mu_1 - \mu_2 < \Delta_o$ (lower-tail)

\item $H_o: \mu_1 - \mu_2 \leq \Delta_o$ vs $H_a: \mu_1 - \mu_2 > \Delta_o$ (upper-tail)

\item $H_o: \mu_1 - \mu_2 = \Delta_o$ vs $H_a: \mu_1 - \mu_2 \neq \Delta_o$ (two-tail)

\end{itemize}

\textbf{Test Statistic:}\\

$t=\frac{(\overline{\chi}_1-\overline{\chi}_2)-\Delta_o}{s_p\sqrt{\frac{1}{n_1}+\frac{1}{n_2}}} \sim t_{n_1+n_2-2}$\\

$s_p$ is the pooled standard deviation\\

\textbf{Rejection Rules:}\\

Consider test statistic $z$, and significance value $\alpha$.

\begin{itemize}

\setlength\itemsep{0em}

\item Lower-tail test: Reject $H_o$ if $z \leq z_{\alpha}$

\item Upper-tail test: Reject $H_o$ if $z \geq z_{\alpha}$

\item Two-tail test: Reject $H_o$ if $|z| \geq z_{\frac{\alpha}{2}}$

\end{itemize}

\end{minipage}

};

%------------ Testing of Hypotheses about mu Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Testing of Hypotheses about $\mu$};

\end{tikzpicture}

%------------ ANOVA ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{One-way ANOVA:}\\

$k = $ number of populations or treatments being compared\\

$\mu_1 = $ mean of population 1 or true average response when treatment 1 is applied.\\

...\\

$\mu_k = $ mean of population $k$ or true average response when treatment $k$ is applied.\\

\textbf{Assumptions:}

\begin{itemize}

\setlength\itemsep{0em}

\item For each population, response variable is normally distributed

\item Variance of response variable, $\sigma^2$ is the same for all the populations

\item The observations must be independent

\end{itemize}

\textbf{Hypotheses:}\\

$H_o: \mu_1 = \mu_2 = ... = \mu_k$\\

$H_a: \mu_i \neq \mu_j$ for $i \neq j$\\

\textbf{Notation:}\\

$y_{ij}$ is the $j^{th}$ observed value from the $i^{th}$ population/treatment.\\

\begin{tabular}{lp{8cm} l}

Total mean: & $\overline{y}_{i\cdot} = \frac{y_{i\cdot}}{n_i} = \frac{\sum_{j=1}^{n_i}y_{ij}}{n_i}$\\

Total sample size: & $n = n_1 + n_2 + ... + n_k$ \\

Grand total: & $y_{\cdot\cdot} = \sum_{i=1}^{k}\sum_{j=1}^{n_i}y_{ij}$\\

Grand mean: & $\overline{y}_{\cdot\cdot} = \frac{y_{\cdot\cdot}}{n} = \frac{\sum_{i=1}^{k}\sum_{j=1}^{n_i}y_{ij}}{n}$\\

\end{tabular}\\

$s^2 = \frac{\sum_{j=1}^{k}(n_i-1)s_i^2}{n-k}$ = MSE, where $s_i^2 = \frac{\sum_{j=1}^{n_i}(y_{ij}-\overline{y}_{i\cdot})^2}{n_i-1}$\\

\textbf{The ANOVA Table:}

\begin{center}

\begin{tabular}{c|c|c|c|c}

Source of Variation & df & Sum of Squares & Mean Square & F-ratio \\

\hline

Treatment & $k-1$ & SSTr & MSTr = $\frac{\textrm{SSTr}}{k-1}$ & $\frac{\textrm{MSTr}}{\textrm{MSE}}$ \\

Error & $n-k$ & SSE & MSE = $\frac{\textrm{SSE}}{n-k}$ & \\

Total & $n-1$ & SST & & \\

\end{tabular}

\end{center}

\begin{tabular}{lp{12cm} l}

SST &= SSTr + SSE\\\\

SST &= $\sum_{i=1}^{k}\sum_{j=1}^{n_i}(y_{ij}-\overline{y}_{\cdot\cdot})^2 = \sum_{i=1}^{k}\sum_{j=1}^{n_i}y_{ij}^2-\frac{1}{n}y_{\cdot\cdot}^2$\\

SSTr &= $\sum_{i=1}^{k}\sum_{j=1}^{n_i}(\overline{y}_{i\cdot}-\overline{y}_{\cdot\cdot})^2 = \sum_{i=1}^{k}\frac{1}{n_i}y_{i\cdot}^2-\frac{1}{n}y_{\cdot\cdot}^2$\\

SSE &= $\sum_{i=1}^{k}\sum_{j=1}^{n_i}(y_{ij}-\overline{y}_{i\cdot})^2= \sum_{i=1}^{k}\sum_{j=1}^{n_i}y_{ij}^2-\sum_{i=1}^{k}\frac{y_{i\cdot}^2}{n_i} = \sum_{i=1}^{k}(n_i-1)s_i^2$\\

\end{tabular}\\\\

\textbf{Test Statistic:}\\

\begin{tabular}{lp{8cm} l}

$F_{obs} = \frac{\textrm{MSTr}}{\textrm{MSE}} \sim F_{v_1, v_2}$ & $v1 = df(\textrm{SSTr}) = k-1$\\

& $v2 = df(\textrm{SSE}) = n-k$\\

Reject $H_o$ if $F_{obs} \geq F_{\alpha, v_1, v_2}$

\end{tabular}\\

\end{minipage}

};

%------------ ANOVA Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Analysis of Variance (ANOVA)};

\end{tikzpicture}

%------------ Covariance and Correlation Coefficient ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

On a scatter plot, each observation is represented as a point with x-coord $x_i$ and y-coord $y_i$.\\

\textbf{Sample Covariance:} $Cov(x, y)$\\

\begin{tabular}{lp{12cm} l}

$Cov(x,y)$ & $=\frac{1}{n-1}\sum_{i=1}^{n}(x_i-\overline{x})(y_i-\overline{y})$\\

& $=\frac{1}{n-1}[\sum_{i=1}^{n}x_iy_i-\frac{\sum_{i=1}^{n}x_i\sum_{i=1}^{n}y_i}{n}]$\\

& $=\frac{1}{n-1}[\sum_{i=1}^{n}x_iy_i-n\overline{x}\overline{y}]$

\end{tabular}\\

\begin{itemize}

\setlength\itemsep{0em}

\item If $x$ and $y$ are positively associated, then $Cov(x, y)$ will be large and positive

\item If $x$ and $y$ are negatively associated, then $Cov(x, y)$ will be large and negative

\item If the variables are not positively nor negatively associated, then $Cov(x,y)$ will be small

\end{itemize}

\textbf{Sample Correlation Coefficient:} $r$\\

$r = \frac{1}{n-1}\sum_{i=1}^{n}(\frac{x_i-\overline{x}}{s_x})(\frac{y_i-\overline{y}}{s_y})$, where $s_x = \sqrt{\frac{\sum_{i=1}^{n}(x_i-\overline{x})^2}{n-1}}$ and $s_y = \sqrt{\frac{\sum_{i=1}^{n}(y_i-\overline{y})^2}{n-1}}$\\

$r = \frac{Cov(x,y)}{s_x s_y}$

\begin{itemize}

\setlength\itemsep{0em}

\item Always falls between -1 and +1

\item A positive $r$ value indicates a positive association

\item A negative $r$ value indicates a negative association

\item $r$ value close to +1 or -1 indicates a strong linear association

\item $r$ value close to 0 indicates a weak association

\end{itemize}

\end{minipage}

};

%------------ Covariance and Correlation Coefficient Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Covariance and Correlation Coefficient};

\end{tikzpicture}

%------------ Simple Linear Regression ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

\textbf{Regression Line:}\\

Simple linear regression model: $y = \beta_o + \beta_1x+\varepsilon$\\

$\beta_o$, $\beta_1$, and $\sigma^2$ are parameters, $y$ and $\varepsilon$ are random variables. $\varepsilon$ is the error term.\\

True regression line: $E(y) = \beta_o + \beta_1x$\\

Least squares regression line: $\hat{y} = \hat{\beta_o} + \hat{\beta_1}x$\\

$\hat{y}$, $\hat{\beta_o}$, and $\hat{\beta_1}$ are point estimates for $y$, $\beta_o$, and $\beta_1$.\\

Residual: $\varepsilon_i = y_i - \hat{y_i}$\\

$\hat{\beta_1} = \frac{\sum_{i=1}^{n}(x_i-\overline{x})(y_i-\overline{y})}{\sum_{i=1}^{n}(x_i-\overline{x})^2} = \frac{\sum_{i=1}^{n}x_iy_i-n\overline{x}\overline{y}}{\sum_{i=1}^{n}x_i^2-n\overline{x}^2} = r\frac{s_y}{s_x}$\\

$\hat{\beta_o} = \frac{\sum_{i=1}^{n}y_i-\hat{\beta_1}\sum_{i=1}^{n}x_i}{n} = \overline{y} - \hat{\beta_1}\overline{x}$\\

\textbf{Coefficient of Determination:} $r^2$\\

The proportion of observed $y$ variation that can be explained by the simple linear regression model.

\end{minipage}

};

%------------ Simple Linear Regression Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Simple Linear Regression};

\end{tikzpicture}

%------------ Estimating Variance ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

$\hat{\sigma}^2 = s^2 = \frac{\textrm{SSE}}{n-2} = \frac{\sum_{i=1}^{n}(y_i - \hat{y_i})^2}{n-2}$\\

\textbf{Error Sum of Squares} (SSE):\\

SSE = $\sum_{i=1}^{n}\varepsilon_i^2 = \sum_{i=1}^{n}(y_i - \hat{y_i})^2 = \sum_{i=1}^{n}[y_i-(\hat{\beta_o}+\hat{\beta_1}x_i)]^2$\\

SSE is a measure of variation in $y$ left unexplained by linear regression model.\\

\textbf{Total Sum of Squares} (SST):\\

SST = $\sum_{i=1}^{n}(y_i-\overline{y})^2$\\

SST is sum of squared deviations about sample mean of observed $y$ values.\\

\textbf{Regression Sum of Squares} (SSR):\\

SSR = $\sum_{i=1}^{n}(\hat{y_i}-\overline{y})^2$\\

SSR is total variation explained by the linear regression model. \\

SST = SSR + SSE\\

\textbf{Coefficient of Determination from SST, SSR, and SSE}:\\

$r^2 = 1 - \frac{SSE}{SST}$\\

or\\

$r^2 = \frac{SSR}{SST}$

\end{minipage}

};

%------------ Estimating Variance Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Estimating $\sigma^2$ (SLR)};

\end{tikzpicture}

\vfill\null

\columnbreak

%------------ Slope Parameter ---------------

\begin{tikzpicture}

\node [mybox] (box){%

\begin{minipage}{0.46\textwidth}

When $\beta_1 = 0$ there is no linear relationship between the two variables.\\

\textbf{Hypotheses:}\\

$H_o: \beta_1 = 0$\\

$H_a: \beta_1 \neq 0$\\

\textbf{Test statistic:}\\

$t_{\textrm{obs}} = \frac{\hat{\beta_1} }{s_{\hat{\beta_1}}} \sim t_{n-2}$, where $s_{\hat{\beta_1}} = \frac{s}{s_x\sqrt{n-1}}$\\\\

Reject $H_o$ if $|t_{\textrm{obs}}| \geq t_{\frac{\alpha}{2},{n-2}}$\\

\textbf{$(1-\alpha)100\%$ Confidence Interval for $\beta_1$:}\\

$\hat{\beta_1} \pm t_{\frac{\alpha}{2}, n-2} s_{\hat\beta_1}$

\end{minipage}

};

%------------ Slope Parameter Header ---------------------

\node[fancytitle, right=10pt] at (box.north west) {Slope Parameter $\beta_1$ (SLR)};

\end{tikzpicture}

\end{multicols*}

\end{document}

Contact GitHub API Training Shop Blog About

© 2016 GitHub, Inc. Terms Privacy Security Status Help